Dec 03, 2012: With the Hardware setting, most graphics instructions are handled by the GPU. Many laptop GPUs are not capable of hardware rendering, so you have to switch to software. In some cases, even though the GPU can handle the instructions, render quality is better in software. The plugin author stopped working on OpenCL a few months ago, and it is currently far worse than hardware rendering. The main reason PCSX2 software rendering is better is that software mode is automatically coherent, since it can use the textures directly out of memory.

High-definition image processing is the lifeblood of today’s VFX, graphic design, industrial design, and animation industries. When working in one of these industries, the most important tool in your arsenal is your workstation. The Central Processing Unit (CPU) is the heart of your workstation and handles many tasks, such as executing applications and loading drivers. Graphics Processing Units (GPUs), specialized microprocessors that run in parallel with the CPU, have seen a significant rise in usage as the volume of calculation needed for a single task keeps growing. These processor-intensive tasks may include:

- Gaming

- 3D visualization

- Visual effects

- Image processing

- Big data

- Deep learning / AI

To keep this article focused, we’ll compare CPUs and GPUs only as they are used for image processing, or more specifically, image rendering. After reading it, you should have a clearer understanding of what each type of rendering solution can offer you and your studio, and be better equipped to decide which option suits your projects.

| CPU Rendered Result | GPU Rendered Result |

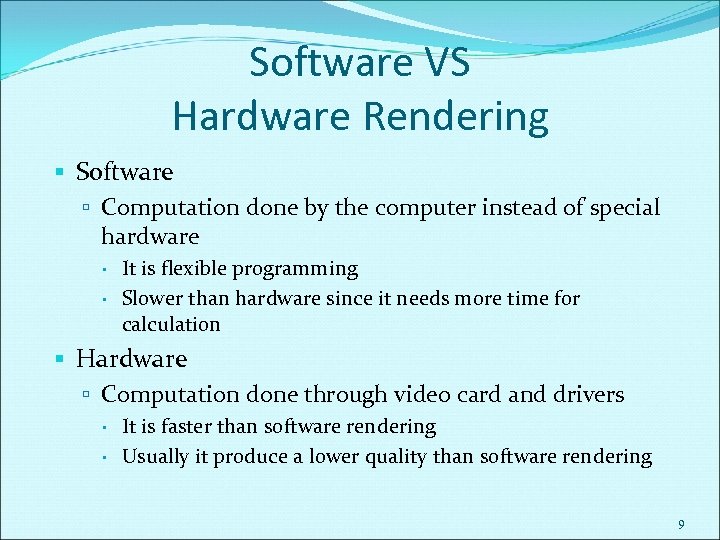


CPU vs. GPU Speed

The first and most obvious factor to address is speed. A CPU has a relatively small number of powerful processing cores (from a handful to several dozen), which makes it efficient at serial computing: carrying out instructions one after another. A GPU instead packs in far more, smaller cores, often thousands, so your workstation can work on many parts of a task at the same time.
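The parallelism argument rests on the fact that most rendering work splits into independent pieces. The Python sketch below renders an image one pixel at a time and again as independent tiles handed to a worker pool, then checks that both produce the same picture. The `shade` function, image size, and tile size are hypothetical stand-ins chosen for illustration. It shows the decomposition only: in CPython, threads do not speed up pure-Python arithmetic because of the global interpreter lock, and a GPU applies the same idea at a much finer grain across its many cores.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical image and tile sizes, chosen only for illustration.
WIDTH, HEIGHT, TILE = 64, 48, 16

def shade(x: int, y: int) -> int:
    """Hypothetical per-pixel work: a diagonal gradient in 0..255."""
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2

def render_serial() -> dict:
    """Visit every pixel in order, like one core working through a queue."""
    return {(x, y): shade(x, y) for y in range(HEIGHT) for x in range(WIDTH)}

def render_tile(x0: int, y0: int) -> dict:
    """Render one tile; it reads nothing outside its own pixel range."""
    return {(x, y): shade(x, y)
            for y in range(y0, min(y0 + TILE, HEIGHT))
            for x in range(x0, min(x0 + TILE, WIDTH))}

def render_tiled(workers: int = 4) -> dict:
    """Hand independent tiles to a worker pool, then merge the results."""
    origins = [(x0, y0) for y0 in range(0, HEIGHT, TILE)
               for x0 in range(0, WIDTH, TILE)]
    image = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for tile in pool.map(lambda origin: render_tile(*origin), origins):
            image.update(tile)
    return image

if __name__ == "__main__":
    assert render_tiled() == render_serial()  # same picture either way
    print(len(render_tiled()), "pixels rendered")  # 3072 pixels rendered
```

Because no tile depends on another, the tiles could just as well go to separate processes, separate machines in a render farm, or, at pixel granularity, GPU cores.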

GPUs have grown considerably more capable since they were first introduced. Where CPUs are built to handle individual tasks in sequence, GPUs offer superior memory bandwidth and raw processing power for tasks that require many parallel computations over large data sets; on such workloads they can be many times faster, in favorable cases up to 100 times.

With GPUs, work that would take hours of processing can often finish in minutes, which streamlines the design process. If speed is the main priority in your workflow, GPU-based rendering is the preferred solution.

| Renderer | Render time |
| --- | --- |
| CPU | 18.4 minutes |
| GPU | 6.5 minutes |
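The two render times in this comparison can be turned into a speedup figure. A minimal sketch; the helper name `speedup` is ours and not part of any benchmarking tool:

```python
def speedup(cpu_minutes: float, gpu_minutes: float) -> float:
    """How many times faster the GPU render finished than the CPU render."""
    return cpu_minutes / gpu_minutes

cpu, gpu = 18.4, 6.5  # render times in minutes, from the comparison above
print(f"speedup: {speedup(cpu, gpu):.2f}x")  # speedup: 2.83x
print(f"time saved: {cpu - gpu:.1f} min ({(cpu - gpu) / cpu:.1%})")  # time saved: 11.9 min (64.7%)
```

A roughly 2.8× speedup for this scene is well below the "up to 100 times" ceiling, so actual gains depend heavily on the scene and the renderer.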


CPU vs. GPU Initial Graphic Fidelity

Rendering is a time-consuming process, but quality can’t be rushed. Though it might take hours (or even days) to finish rendering an image, traditional CPU-based rendering is more likely to deliver higher image quality: clearer images with less noise.

A GPU has many more cores than a CPU, but each GPU core is slower than a CPU core. When many CPUs are interconnected in a render farm, for example, they can potentially produce a more refined final result than a GPU-based graphics solution. In film this is the usual standard for producing high-quality frames, since there is no hard time limit on how long each frame may take to render.

On the other hand, with affordable VR on the rise, games are also becoming much more immersive, and with immersion comes high-quality image rendering and real-time processing that can put a workstation to the test. To put it simply, modern games and VFX have become too taxing for a CPU-only graphics solution.

If you’re willing to take your time and aren’t pressured by deadlines to get the very best possible image, then CPU-based rendering may be what you’re looking for.

Figure: CPU-rendered result (Intel i7) vs. GPU-rendered result (Nvidia CUDA GPU).

CPU vs. GPU Cost

As hardware becomes more impressive, its price also becomes a deciding factor.

In addition to speed, a single GPU can, for rendering workloads, deliver the equivalent power of five to ten CPUs. A single GPU workstation can therefore do the work of several CPU-based workstations combined, giving independent artists and studios the freedom to create, design, and develop high-resolution images at home. GPUs also significantly reduce hardware costs and eliminate the need for multiple machines to produce professional-quality work, which can now be made in minutes instead of hours.

Without the need for expensive CPU render farms, individual creators can afford and rely on their own GPU workstations and have studio-quality work for a fraction of the cost.

CPU vs. GPU Real Time Visualization

With certain workflows, particularly VFX, graphic design, and animation, it takes a lot of time to set up a scene and adjust lighting, work that usually happens in the application’s viewport. A workstation’s GPU drives viewport performance in your studio’s software, allowing real-time viewing and manipulation of your models, lights, and framing in three dimensions. Some GPU-only rendering software even lets you work entirely in a rendered viewport, increasing your output and minimizing errors that may arise from rendering in another program.

The benefits of working and rendering with GPU-accelerated machines are clear when compared with traditional CPU-based workstations, which can slow down production or strain project budgets with potentially necessary upgrades.

Making the Choice Between CPU and GPU Rendering

| Comparison | CPU | GPU |
| --- | --- | --- |
| Speed | ✘ | ✔ |
| Initial Graphic Fidelity | ✔ | ✘ |
| Cost | ✘ | ✔ |
| Real Time Visualization | ✘ | ✔ |

Keep in mind that GPUs aren’t intended to completely replace CPU workstations and workflows. The benefits of CPU-based rendering may appear to pale in comparison with those of GPU-based rendering, but the right choice ultimately depends on what you or your studio need. The two processors work in synergy: the GPU is meant not to replace the CPU but to accelerate and streamline existing practices and workflows, maximize output, and offload processor-heavy computations that would otherwise bog down a system.

Even with the fastest, most powerful GPUs at your disposal, the CPU still pulls its share of the weight. To the end user, it will simply appear that applications run faster and more smoothly. Using both in tandem will do much more for your work and presentations, and greatly increase your machine’s ability to quickly bring your creations to life. Happy rendering!

Software rendering is the process of generating an image from a model by means of computer software. In the context of computer graphics rendering, software rendering refers to a rendering process that is not dependent upon graphics hardware ASICs, such as a graphics card. The rendering takes place entirely in the CPU. Rendering everything with the (general-purpose) CPU has the main advantage that it is not restricted to the (limited) capabilities of graphics hardware, but the disadvantage that more semiconductors are needed to obtain the same speed.
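To make "the rendering takes place entirely in the CPU" concrete, here is a minimal sketch of the core of a software rasterizer: filling one triangle into a framebuffer using edge functions, a common textbook technique that is not taken from any renderer named in this article. The framebuffer is a plain list of lists, and the triangle in the usage example is a hypothetical input.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (A, B, P); its sign says which side of A->B P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterize(tri, width, height, color=1):
    """Fill one triangle into a width x height framebuffer, entirely on the CPU."""
    fb = [[0] * width for _ in range(height)]
    (x0, y0), (x1, y1), (x2, y2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return fb  # degenerate triangle: covers no pixels
    if area < 0:
        # Swap two vertices so that "inside" always means all edge values >= 0.
        (x1, y1), (x2, y2) = (x2, y2), (x1, y1)
    # Only test pixels inside the triangle's bounding box, clipped to the screen.
    min_x, max_x = max(int(min(x0, x1, x2)), 0), min(int(max(x0, x1, x2)), width - 1)
    min_y, max_y = max(int(min(y0, y1, y2)), 0), min(int(max(y0, y1, y2)), height - 1)
    for y in range(min_y, max_y + 1):
        for x in range(min_x, max_x + 1):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            if (edge(x1, y1, x2, y2, px, py) >= 0 and
                    edge(x2, y2, x0, y0, px, py) >= 0 and
                    edge(x0, y0, x1, y1, px, py) >= 0):
                fb[y][x] = color
    return fb

if __name__ == "__main__":
    # Hypothetical triangle and an 8x8 framebuffer, printed as ASCII art.
    for row in rasterize([(0, 0), (8, 0), (0, 8)], 8, 8):
        print("".join("#" if v else "." for v in row))
```

Every pixel test here is ordinary arithmetic, which is exactly the work a graphics card's fixed rasterization hardware takes off the CPU.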


Rendering is used in architecture, simulators, video games, movies and television visual effects, and design visualization. Rendering is the last step in an animation process, and gives the final appearance to the models and animation with visual effects such as shading, texture mapping, shadows, reflections and motion blur.[1] Rendering can be split into two main categories: real-time rendering (also known as online rendering) and pre-rendering (also called offline rendering). Real-time rendering is used to interactively render a scene, as in 3D computer games, and generally each frame must be rendered in a few milliseconds. Offline rendering is used to create realistic images and movies, where each frame can take hours or days to complete, or to debug complex graphics code.
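The difference between the two categories is easiest to see as a time budget. This sketch converts a target frame rate into the milliseconds available per frame; the frame rates and the one-hour offline frame are illustrative assumptions, not measurements.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one frame at a target frame rate."""
    return 1000.0 / fps

if __name__ == "__main__":
    for fps in (30, 60, 144):  # illustrative real-time targets
        print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")
    offline_ms = 60 * 60 * 1000  # hypothetical offline frame taking one hour
    print(f"one-hour frame = {offline_ms / frame_budget_ms(60):,.0f}x the 60 fps budget")
```

At 60 fps each frame gets about 16.7 ms, so a single one-hour offline frame would consume 216,000 real-time frame budgets.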

Real-time software rendering

For real-time rendering the focus is on performance. The earliest texture-mapped real-time software renderers for PCs used many tricks to create the illusion of 3D geometry (true 3D was limited to flat or Gouraud-shaded polygons employed mainly in flight simulators). Ultima Underworld, for example, allowed a limited form of looking up and down, slanted floors, and rooms over rooms, but resorted to sprites for all detailed objects. The technology used in these games is currently categorized as 2.5D.
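Gouraud shading computes lighting only at a polygon's vertices and linearly interpolates the resulting intensities across its surface. Period software renderers typically did this by walking edges and scanlines; the sketch below instead uses the equivalent barycentric formulation for a single triangle, and assumes a simple Lambertian (N·L) lighting model at the vertices. The function names and the example normals and light direction are illustrative.

```python
def lambert(normal, light_dir):
    """Per-vertex diffuse intensity, max(0, N . L), for unit-length vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (A, B, P)."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def gouraud(tri, intensities, px, py):
    """Linearly interpolate per-vertex intensities at (px, py) using barycentric
    weights; returns None when the point lies outside the triangle."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return None  # degenerate triangle
    # Dividing by the signed area makes the weights sum to 1 for either winding.
    w0 = edge(x1, y1, x2, y2, px, py) / area
    w1 = edge(x2, y2, x0, y0, px, py) / area
    w2 = edge(x0, y0, x1, y1, px, py) / area
    if min(w0, w1, w2) < 0:
        return None
    i0, i1, i2 = intensities
    return w0 * i0 + w1 * i1 + w2 * i2

if __name__ == "__main__":
    light = (0.0, 0.0, 1.0)  # hypothetical light direction
    normals = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.8, 0.6)]  # unit normals
    levels = [lambert(n, light) for n in normals]  # lighting evaluated only at vertices
    tri = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
    print(gouraud(tri, levels, 1.0, 1.0))  # interpolated, not re-lit, at this pixel
```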

One of the first games architecturally similar to modern 3D titles, allowing full 6DoF, was Descent, which featured 3D models made entirely from bitmap-textured triangular polygons. Voxel-based graphics also gained popularity for fast and relatively detailed terrain rendering, as in Delta Force, but popular fixed-function hardware eventually made its use impossible. Quake features an efficient software renderer by Michael Abrash and John Carmack. With their popularity, Quake and other polygonal 3D games of that time helped the sales of graphics cards, and more games started using hardware APIs like DirectX and OpenGL. Though software rendering fell off as a primary rendering technology, many games well into the 2000s still had a software renderer as a fallback; Unreal and Unreal Tournament, for instance, feature software renderers able to produce enjoyable quality and performance on CPUs of that period. One of the last AAA games without a hardware renderer was Outcast, which featured advanced voxel technology but also texture filtering and bump mapping as found on graphics hardware.

In the video game console and arcade game markets, the evolution of 3D was more abrupt, as they had always relied heavily on single-purpose chipsets. 16-bit consoles gained RISC accelerator cartridges in games such as Star Fox and Virtua Racing, which implemented software rendering through tailored instruction sets. The Jaguar and 3DO were the first consoles to ship with 3D hardware, but it wasn't until the PlayStation that such features came to be used in most games.

Games for children and casual gamers (who use outdated systems or systems primarily meant for office applications) during the late 1990s to early 2000s typically used a software renderer as a fallback. For example, Toy Story 2: Buzz Lightyear to the Rescue offers a choice between hardware and software rendering before playing the game, while others like Half-Life default to software mode and can be switched to OpenGL or DirectX in the Options menu. Some 3D modeling software also features software renderers for visualization. Finally, the emulation and verification of hardware also requires a software renderer; an example of the latter is the Direct3D reference rasterizer.

But even for high-end graphics, the 'art' of software rendering hasn't completely died out. While early graphics cards were much faster than software renderers and originally had better quality and more features, they restricted the developer to 'fixed-function' pixel processing. A need soon arose to diversify the look of games. Software rendering has no such restrictions, because an arbitrary program is executed. Graphics cards therefore reintroduced this programmability by executing small programs per vertex and per pixel/fragment, known as shaders. Shader languages, such as High Level Shader Language (HLSL) for DirectX or the OpenGL Shading Language (GLSL), are C-like programming languages for shaders and begin to resemble (arbitrary-function) software rendering.
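The idea that a software renderer simply "executes an arbitrary program" per pixel fits in a few lines. The sketch below treats any Python function from normalized pixel coordinates to an RGB tuple as a "fragment shader" and runs it once per pixel on the CPU. The `render`, `gradient`, and `checker` names are illustrative, and this is an analogy to GPU shaders, not how HLSL or GLSL programs are actually compiled or executed.

```python
from typing import Callable, List, Tuple

Color = Tuple[int, int, int]
# Illustrative "fragment shader": any function of normalized pixel coordinates (u, v).
Shader = Callable[[float, float], Color]

def render(width: int, height: int, shader: Shader) -> List[List[Color]]:
    """Run the shader once per pixel (at the pixel center) on the CPU."""
    return [[shader((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)]

def gradient(u: float, v: float) -> Color:
    """Red grows left to right, green top to bottom."""
    return (int(255 * u), int(255 * v), 128)

def checker(u: float, v: float) -> Color:
    """Arbitrary control flow is fine: an 8 x 8 black-and-white checkerboard."""
    white = (int(u * 8) + int(v * 8)) % 2 == 0
    return (255, 255, 255) if white else (0, 0, 0)

if __name__ == "__main__":
    for row in render(8, 8, checker):
        print("".join("#" if r else "." for r, _, _ in row))
```

Swapping `checker` for `gradient`, or for any other function, changes the look without touching the renderer, which is the flexibility fixed-function hardware lacked.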

Since graphics hardware became the primary means of real-time rendering, CPU performance has continued to grow steadily. This has allowed new software rendering technologies to emerge. Although largely overshadowed by the performance of hardware rendering, some modern real-time software renderers manage to combine a broad feature set with reasonable performance (for a software renderer) by making use of specialized dynamic compilation and advanced instruction set extensions such as SSE. Although the dominance of hardware rendering over software rendering is now undisputed because of its unparalleled performance, features, and continuing innovation, some believe that CPUs and GPUs will converge one way or another and that the line between software and hardware rendering will fade.[2]

Software fallback

For various reasons such as hardware failure, broken drivers, emulation, quality assurance, software programming, hardware design, and hardware limitations, it is sometimes useful to let the CPU assume some or all functions in a graphics pipeline.

As a result, there are a number of general-purpose software packages capable of replacing or augmenting an existing hardware graphical accelerator, including:

- RAD Game Tools' Pixomatic, sold as middleware intended for static linking inside D3D 7–9 client software.

- SwiftShader, a library sold as middleware intended for bundling with D3D9 & OpenGL ES 2 client software.

- The swrast, softpipe, & LLVMpipe renderers inside Mesa work as a shim at the system level to emulate an OpenGL 1.4–3.2 hardware device.

- WARP, provided since Windows Vista by Microsoft, which works at the system level to provide fast D3D 9.1 and above emulation. This is in addition to the extremely slow software-based reference rasterizer Microsoft has always provided to developers.

- The Apple software renderer in CGL, provided in Mac OS X by Apple, which works at the system level to provide fast OpenGL 1.1–4.1 emulation.
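At the application level, the fallback idea amounts to trying renderers in order of preference and dropping to the CPU when the hardware path fails. The sketch below shows that control flow only; `init_gpu`, `init_cpu`, and `BackendUnavailable` are hypothetical stand-ins, not the API of any package listed above. (At the system level, Mesa, for example, can be forced onto its software path with the `LIBGL_ALWAYS_SOFTWARE` environment variable.)

```python
from typing import Callable, List, Tuple

class BackendUnavailable(Exception):
    """Raised when a (hypothetical) rendering backend cannot start on this machine."""

def init_gpu() -> str:
    # Hypothetical hardware path: simulate a broken driver to exercise the fallback.
    raise BackendUnavailable("driver failed to create a device")

def init_cpu() -> str:
    # Hypothetical software path: needs only the CPU, so it always succeeds here.
    return "software renderer"

def choose_backend(candidates: List[Tuple[str, Callable[[], str]]]) -> str:
    """Try each backend in order of preference; return the first that initializes."""
    failures = []
    for name, init in candidates:
        try:
            return init()
        except BackendUnavailable as exc:
            failures.append(f"{name}: {exc}")  # remember why, then try the next one
    raise RuntimeError("no usable renderer (" + "; ".join(failures) + ")")

if __name__ == "__main__":
    print(choose_backend([("hardware", init_gpu), ("software", init_cpu)]))
```

Keeping the software path last in the list means it is only used when nothing faster is available, which mirrors how the fallback renderers above are typically deployed.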

Pre-rendering

Unlike real-time rendering, pre-rendering treats performance as only a secondary priority. It is used mainly in the film industry to create high-quality renderings of lifelike scenes. Many special effects in today's movies are created entirely or partially with computer graphics. For example, the character of Gollum in Peter Jackson's The Lord of the Rings films is completely computer-generated imagery (CGI). CGI is also gaining popularity for animated movies: most notably, Pixar has produced a series of movies such as Toy Story and Finding Nemo, and the Blender Foundation produced the world's first open movie, Elephants Dream.

Because of the need for very high quality and a wide diversity of effects, offline rendering requires a lot of flexibility. Even though commercial real-time graphics hardware is becoming higher quality and more programmable by the day, most photorealistic CGI still requires software rendering. Pixar's RenderMan, for example, allows shaders of unlimited length and complexity, demanding a general-purpose processor. Techniques for high realism such as ray tracing and global illumination were long considered ill-suited to hardware implementation and in most cases are realized purely in software.
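Ray tracing maps naturally onto general-purpose code. The sketch below is a minimal ray caster, assuming a pinhole camera at the origin looking down -z and a single hypothetical unit sphere at z = -3; it casts one primary ray per pixel and marks hits, with no shading, reflections, or global illumination. It illustrates the technique only and is unrelated to how RenderMan or any production renderer is implemented.

```python
import math
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance t along a unit-length ray to the nearer sphere hit, or None on a miss.
    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t."""
    oc = sub(origin, center)
    b = dot(oc, direction)             # half of the linear coefficient
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                    # the ray misses the sphere
    t = -b - math.sqrt(disc)           # nearer root; rays starting inside are ignored
    return t if t > 0 else None

def render(width: int, height: int) -> List[str]:
    """Cast one primary ray per pixel through an image plane at z = -1."""
    sphere_center, radius = (0.0, 0.0, -3.0), 1.0  # hypothetical one-object scene
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            u = (x + 0.5) / width * 2 - 1       # pixel center mapped to [-1, 1]
            v = 1 - (y + 0.5) / height * 2
            n = math.sqrt(u * u + v * v + 1.0)
            d = (u / n, v / n, -1.0 / n)        # normalized ray direction
            row += "#" if hit_sphere((0.0, 0.0, 0.0), d, sphere_center, radius) else "."
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render(21, 11)))
```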


References

- [1] "LIVE Design - Interactive Visualizations | Autodesk". Archived from the original on February 21, 2014. Retrieved 2016-08-20.

- [2] Valich, Theo (2012-12-13). "Tim Sweeney, Part 2: 'DirectX 10 is the last relevant graphics API'". TG Daily. Archived from the original on March 4, 2016. Retrieved 2016-11-07.